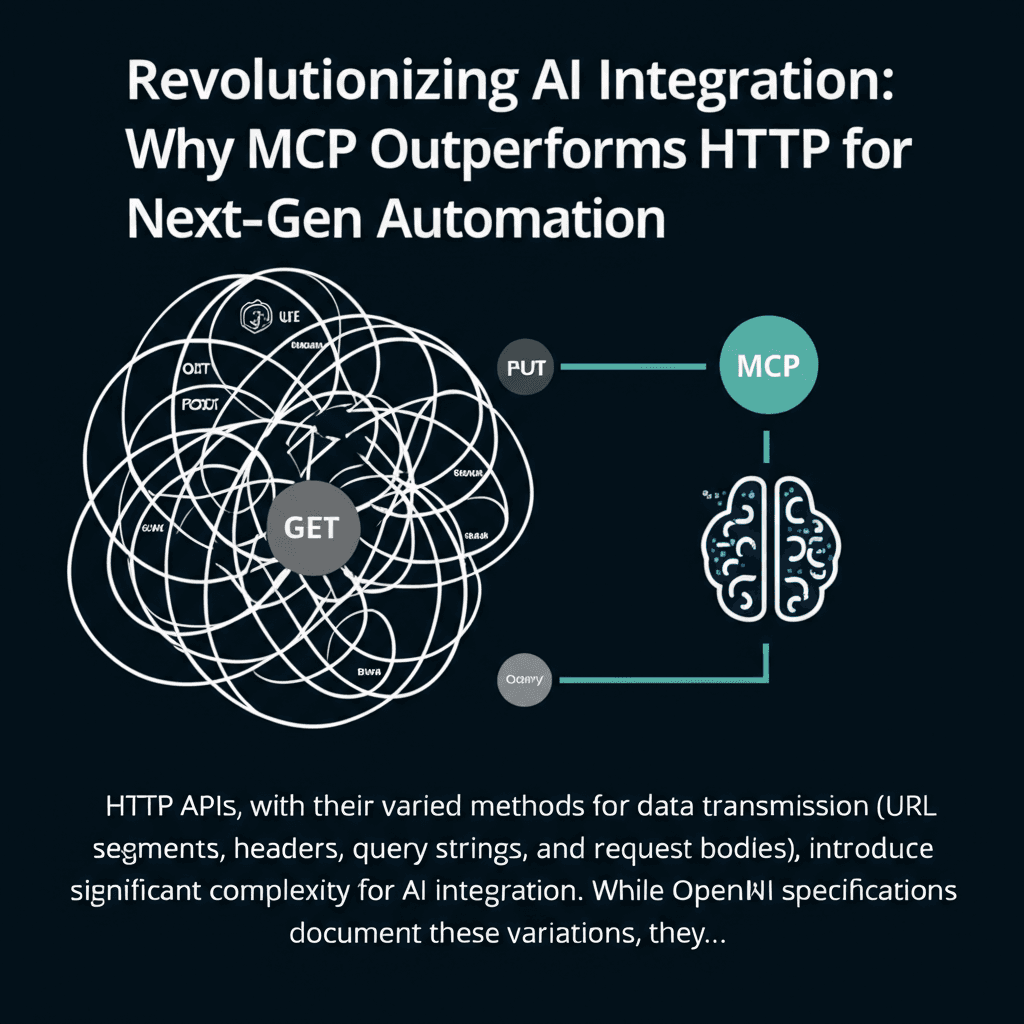

The promise of artificial intelligence (AI) and large language models (LLMs) to automate complex tasks often collides with the intricate reality of integrating with existing systems. Traditional HTTP APIs spread their inputs across several transmission methods (URL path segments, headers, query strings, request bodies), and each API combines them differently, which creates unpredictable friction points. OpenAPI specifications document these nuances for each API, but they describe every service’s conventions rather than standardizing how a client invokes them. That variability is a significant hurdle for building reliable, production-ready AI automation tools. This is where the Model Context Protocol (MCP) emerges as a transformative solution.
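To make that friction concrete, here is a minimal Python sketch (standard library only) of a hypothetical REST call in which four inputs travel four different ways. The endpoint, field names, and token are invented for illustration. The request is built but never sent; the point is how many placement decisions an LLM would have to get right to produce it.

```python
import json
import urllib.parse
import urllib.request


def build_rename_request(base_url: str, project_id: str, token: str,
                         notify: bool, new_name: str) -> urllib.request.Request:
    """Hypothetical REST call: four inputs, four different placements."""
    # 1. URL path segment
    path = f"/projects/{urllib.parse.quote(project_id)}"
    # 2. Query string
    query = urllib.parse.urlencode({"notify": str(notify).lower()})
    # 3. JSON request body
    body = json.dumps({"name": new_name}).encode("utf-8")
    # 4. Headers (credentials and content type)
    headers = {"Authorization": f"Bearer {token}",
               "Content-Type": "application/json"}
    return urllib.request.Request(f"{base_url}{path}?{query}", data=body,
                                  headers=headers, method="PATCH")


# Built but never sent.
req = build_rename_request("https://api.example.com", "p-42", "secret-token",
                           True, "Apollo")
print(req.get_method(), req.full_url)
```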

MCP: A Paradigm Shift for AI-Function Interaction

Unlike conventional approaches that require LLMs to construct low-level HTTP requests, MCP lets AI systems express declarative intent through “tool invocation”: the model names a tool and supplies structured arguments, and an MCP server runs the corresponding code. This abstracts away the complexities of the underlying infrastructure, so AI models can trigger sophisticated operations with one consistent kind of command. Because execution is offloaded to code modules that can be reviewed and tested like any other software, AI-driven interactions become more predictable, more secure, and easier to govern.
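As a rough illustration, the sketch below builds the message an MCP client sends when a model decides to use a tool: a JSON-RPC 2.0 request with the `tools/call` method, carrying only the tool’s name and its arguments. The `update_project` tool and its arguments are hypothetical, and a real client would first complete the protocol’s `initialize` handshake, which is omitted here. Note that URL layout, headers, and HTTP verbs never appear.

```python
import json
from itertools import count

_request_ids = count(1)  # a fresh id for each request


def tool_call(name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a single-line JSON-RPC 2.0 message."""
    message = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        # The model's entire contribution: which tool, and with what arguments.
        "params": {"name": name, "arguments": arguments},
    }
    return json.dumps(message)  # no indent, so no embedded newlines


print(tool_call("update_project", {"project_id": "p-42", "name": "Apollo", "notify": True}))
```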

Key Advantages: MCP vs. Traditional HTTP APIs

The fundamental differences between MCP and HTTP implementations are crucial for understanding its impact on AI integration:

Deterministic Execution for Unparalleled Reliability: Traditional HTTP integration asks LLMs to generate raw network requests, which can produce “hallucinated” endpoints or malformed parameters. MCP separates tool selection from execution: the LLM chooses a tool from the list a server advertises and supplies structured arguments, while discrete code packages handle input sanitization, validation, and error recovery. The model’s output is still probabilistic, but invalid requests are caught by deterministic checks before anything runs, a level of safety that sending model-built requests straight to the network cannot offer. For organizations seeking reliable AI automation, this reduces errors and increases confidence in AI outputs.
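The split between selection and execution can be sketched as a small registry that checks arguments before any handler runs. This is a simplified Python sketch, not an MCP SDK: `ToolRegistry`, the `resize_volume` tool, and the hand-rolled validator (which understands only `required`, `properties`, and a few primitive `type` names from JSON Schema) are all hypothetical. The result dictionaries mimic the `content`/`isError` shape of an MCP tool result; an unknown tool name raises instead, since a server would report that as a protocol-level error.

```python
from typing import Any, Callable

# Tiny subset of JSON Schema: "required", "properties", and primitive "type" names.
_TYPES = {"string": str, "integer": int, "boolean": bool, "object": dict}


def _validate(schema: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments are acceptable."""
    problems = [f"missing '{key}'" for key in schema.get("required", [])
                if key not in arguments]
    properties = schema.get("properties", {})
    for key, value in arguments.items():
        if key not in properties:
            problems.append(f"unexpected '{key}'")
            continue
        type_name = properties[key]["type"]
        expected = _TYPES[type_name]
        # bool is a subclass of int in Python, so reject True/False for "integer".
        if not isinstance(value, expected) or (expected is int and isinstance(value, bool)):
            problems.append(f"'{key}' must be {type_name}")
    return problems


class ToolRegistry:
    """Hypothetical server-side registry: the model selects, this code executes."""

    def __init__(self) -> None:
        self._tools: dict[str, tuple[dict, Callable[..., Any]]] = {}

    def register(self, name: str, input_schema: dict, handler: Callable[..., Any]) -> None:
        self._tools[name] = (input_schema, handler)

    def call(self, name: str, arguments: dict) -> dict:
        if name not in self._tools:
            # A real MCP server would answer with a JSON-RPC error here.
            raise LookupError(f"Unknown tool: {name}")
        schema, handler = self._tools[name]
        problems = _validate(schema, arguments)
        if problems:
            # Invalid input never reaches the handler.
            return {"isError": True, "content": [{"type": "text", "text": "; ".join(problems)}]}
        result = handler(**arguments)
        return {"isError": False, "content": [{"type": "text", "text": str(result)}]}


registry = ToolRegistry()
registry.register(
    "resize_volume",
    {"type": "object",
     "required": ["volume_id", "size_gb"],
     "properties": {"volume_id": {"type": "string"}, "size_gb": {"type": "integer"}}},
    lambda volume_id, size_gb: f"{volume_id} resized to {size_gb} GB",
)
# A malformed argument from the model is rejected before anything runs.
print(registry.call("resize_volume", {"volume_id": "vol-1", "size_gb": "large"}))
```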

Code-Backed Architecture for Unwavering Consistency: Legacy HTTP integration relies on brittle prompting strategies in which AI models “guess” appropriate URL structures and data formats, and one wrong guess can cascade into further failures. With MCP, execution flows through version-controlled server-side code, so outputs follow established business logic. Because every AI-triggered operation passes through that code, robust audit trails, automatic retries for transient failures, and centralized observability can be implemented once and applied everywhere.
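Because every AI-triggered call passes through server code, cross-cutting concerns can live in one wrapper. The decorator below is a hypothetical sketch: it writes an audit log line per attempt and retries only errors marked as transient (`ConnectionError` and `TimeoutError` by default). Neither the decorator nor the retry policy is part of MCP itself; they show what a server author might add around each tool handler.

```python
import logging
import time
from functools import wraps
from typing import Any, Callable

audit_log = logging.getLogger("tool_audit")  # hypothetical logger name


def audited(max_attempts: int = 3,
            retry_on: tuple[type[BaseException], ...] = (ConnectionError, TimeoutError),
            delay_s: float = 0.0) -> Callable[[Callable[..., Any]], Callable[..., Any]]:
    """Wrap a tool handler with per-attempt audit logging and bounded retries."""
    def decorator(handler: Callable[..., Any]) -> Callable[..., Any]:
        @wraps(handler)
        def wrapper(**arguments: Any) -> Any:
            for attempt in range(1, max_attempts + 1):
                try:
                    # Exceptions not listed in retry_on propagate immediately.
                    result = handler(**arguments)
                except retry_on as exc:
                    audit_log.warning("tool=%s args=%s attempt=%d error=%r",
                                      handler.__name__, arguments, attempt, exc)
                    if attempt == max_attempts:
                        raise  # out of attempts: surface the last error
                    time.sleep(delay_s)
                else:
                    audit_log.info("tool=%s args=%s attempt=%d status=ok",
                                   handler.__name__, arguments, attempt)
                    return result
            raise ValueError("max_attempts must be at least 1")
        return wrapper
    return decorator


logging.basicConfig(level=logging.INFO)
attempts = {"count": 0}


@audited(max_attempts=3)
def fetch_invoice(invoice_id: str) -> str:
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise ConnectionError("upstream unavailable")  # simulated transient failure
    return f"invoice {invoice_id}: paid"


print(fetch_invoice(invoice_id="INV-7"))
```

Retrying only specific exception types is a deliberate choice: an error caused by bad input, such as a `ValueError`, is surfaced at once, because repeating the same call would not help.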

OS-Native Connectivity for Seamless System-Level Integration: HTTP’s network-bound architecture introduces overheads like TLS handshakes, firewall constraints, and credential management. For local integrations, MCP can avoid these by running the server as a subprocess that communicates over standard input/output (MCP also defines an HTTP-based transport for remote servers, where those concerns return). This enables direct interaction with critical system resources such as file systems, command-line tools, databases, and specialized hardware. A local server runs with the host environment’s permissions, which reduces API token sprawl and lets operators enforce least-privilege access with the OS controls they already use.
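A minimal sketch of the stdio transport, assuming the newline-delimited JSON-RPC framing MCP uses for local servers: the host launches the server as a subprocess, writes one request per line to its stdin, and reads one response per line from its stdout. To stay short, it handles only `tools/call` and skips the `initialize` handshake and `tools/list` that a real server must also implement; the `word_count` tool is hypothetical. The streams are parameters so the loop can be exercised with in-memory buffers.

```python
import io
import json
import sys
from typing import Any, Callable, TextIO


def serve_stdio(handlers: dict[str, Callable[..., Any]],
                inp: TextIO = sys.stdin, out: TextIO = sys.stdout) -> None:
    """Answer newline-delimited JSON-RPC `tools/call` requests, one message per line."""
    for line in inp:
        if not line.strip():
            continue
        request = json.loads(line)
        if "id" not in request:
            continue  # notifications never get a response
        reply: dict[str, Any] = {"jsonrpc": "2.0", "id": request["id"]}
        params = request.get("params", {})
        if request.get("method") != "tools/call":
            reply["error"] = {"code": -32601, "message": f"Method not found: {request.get('method')}"}
        elif params.get("name") not in handlers:
            reply["error"] = {"code": -32602, "message": f"Unknown tool: {params.get('name')}"}
        else:
            text = str(handlers[params["name"]](**params.get("arguments", {})))
            reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
        out.write(json.dumps(reply) + "\n")  # stdout carries protocol messages only
        out.flush()


# In-memory streams stand in for the pipes a host would attach to the subprocess.
fake_stdin = io.StringIO(
    '{"jsonrpc": "2.0", "id": 1, "method": "tools/call", '
    '"params": {"name": "word_count", "arguments": {"text": "hello mcp world"}}}\n'
)
fake_stdout = io.StringIO()
serve_stdio({"word_count": lambda text: len(text.split())}, fake_stdin, fake_stdout)
print(fake_stdout.getvalue(), end="")
```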

Workflow-Centric Design Over Fragmented Endpoints: Traditional REST APIs expose fragmented CRUD (Create, Read, Update, Delete) endpoints, often requiring multiple orchestrated calls to complete a task. MCP tools can encapsulate entire workflows. For instance, a single create_project tool can handle authentication, resource allocation, file templating, team notifications, and audit logging in one invocation; whether that invocation behaves atomically depends on how the server handles a failure partway through. This macro-level abstraction reduces the number of decisions an AI system must get right and minimizes round-trip latency.

Training Efficiency Through Protocol Standardization: The inconsistent nature of REST APIs forces LLMs to cope with countless endpoint-specific peculiarities. MCP shrinks this learning curve by enforcing a uniform invocation pattern across all integrations: every tool is described by a name, a description, and a JSON Schema for its input, and every tool is called the same way. Models, including fine-tuned ones, can learn this recurring pattern instead of recalling API idiosyncrasies, which can accelerate deployment timelines and reduce hallucinations.
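The `create_project` workflow and the uniform tool description can be sketched together. The dictionary below describes a hypothetical `create_project` tool in the `name`/`description`/`inputSchema` shape that MCP servers advertise through `tools/list`; a workflow-sized tool is described and invoked exactly like a trivial one. `run_workflow` is an assumed helper showing one way to approximate atomicity: steps run in order, and if one fails, the completed steps are undone in reverse (a compensation pattern, not a database transaction). The step functions are stubs.

```python
from typing import Callable

# How a server might advertise the workflow in its `tools/list` response.
CREATE_PROJECT_TOOL = {
    "name": "create_project",
    "description": "Create a project: authenticate, allocate resources, "
                   "template files, notify the team, and write an audit entry.",
    "inputSchema": {
        "type": "object",
        "properties": {"name": {"type": "string"}, "team": {"type": "string"}},
        "required": ["name", "team"],
    },
}

# (step name, action, compensating undo); each receives a shared context dict.
Step = tuple[str, Callable[[dict], None], Callable[[dict], None]]


def run_workflow(steps: list[Step], ctx: dict) -> list[str]:
    """Run steps in order; if one fails, undo completed steps in reverse, then re-raise."""
    done: list[Step] = []
    for step in steps:
        _name, action, _undo = step
        try:
            action(ctx)
        except Exception:
            for _done_name, _done_action, undo in reversed(done):
                undo(ctx)
            raise
        done.append(step)
    return [name for name, _action, _undo in done]


def stub_step(label: str) -> Step:
    """Hypothetical stand-in for a real integration call."""
    return (label,
            lambda ctx: ctx["log"].append(f"do {label}"),
            lambda ctx: ctx["log"].append(f"undo {label}"))


ctx = {"name": "apollo", "team": "platform", "log": []}
completed = run_workflow([stub_step("authenticate"), stub_step("allocate_resources"),
                          stub_step("template_files"), stub_step("notify_team"),
                          stub_step("write_audit_log")], ctx)
print(completed)
```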

Seamless Integration with Existing Infrastructure: MCP doesn’t demand infrastructure replacement. It serves as an orchestration layer atop legacy HTTP/REST systems. Organizations can wrap existing microservices into MCP tool definitions via simple adapters, creating hybrid environments where AI systems execute complex workflows while traditional applications continue to consume HTTP APIs. This progressive enhancement path makes adoption practical, allowing incremental implementation while preserving current investments.
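An adapter of this kind can be small. The sketch below, using only the Python standard library, turns an existing GET endpoint into a tool handler by mapping tool arguments onto query parameters. `http_get_tool`, the endpoint URL, and `track_order` are hypothetical; the `opener` parameter defaults to `urllib.request.urlopen` and is swapped for a fake here so the example runs without a network.

```python
import io
import urllib.parse
import urllib.request
from typing import Any, Callable


def http_get_tool(name: str, base_url: str, path: str,
                  opener: Callable[..., Any] = urllib.request.urlopen) -> Callable[..., str]:
    """Hypothetical adapter: expose an existing GET endpoint as a tool handler."""
    def handler(**arguments: Any) -> str:
        # Tool arguments become query parameters; the model never sees the URL.
        url = f"{base_url}{path}?{urllib.parse.urlencode(arguments)}"
        request = urllib.request.Request(url, headers={"Accept": "application/json"})
        with opener(request, timeout=10) as response:
            return response.read().decode("utf-8")
    handler.__name__ = name
    return handler


# A fake opener stands in for the network so the example runs anywhere.
requested_urls: list[str] = []


def fake_opener(request: urllib.request.Request, timeout: float) -> io.BytesIO:
    requested_urls.append(request.full_url)
    return io.BytesIO(b'{"order_id": "A-17", "status": "shipped"}')


track_order = http_get_tool("track_order", "https://orders.internal.example",
                            "/v1/orders/status", opener=fake_opener)
print(track_order(order_id="A-17"))
```

The existing HTTP service is untouched: applications keep calling it directly, while the adapter gives AI clients a named, schema-described entry point to the same endpoint.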

The Future of AI Integration is Here

For AI engineers and enterprise architects, adopting MCP can mean fewer integration failures, lower hallucination risk, faster development, and more resilient production deployments. By prioritizing declarative intent over network mechanics, MCP places business logic where it belongs: in deterministic code rather than in prompt engineering. This protocol represents a foundational shift towards intelligent systems that interact with our digital ecosystems as seamlessly as humans navigate physical spaces, executing diverse tasks from intuitive instructions rather than arcane technical protocols. Explore MCP to unlock the full potential of your AI initiatives.
