The Essential Calculus That Powers Modern Machine Learning

From the movie recommendations on your streaming service to the split-second decisions of autonomous vehicles, modern artificial intelligence rests on mathematical ideas hidden beneath the surface. The unsung heroes? Derivatives and gradients: the calculus tools that let machines learn from data and optimize complex systems. Let’s explore how these concepts form the foundation of machine learning algorithms and artificial intelligence advancements.

Derivatives Decoded: The Mathematics of Instantaneous Change

Imagine descending a mountainside when fog suddenly rolls in. To find the quickest path to safety, you’d instinctively feel the steepness beneath your feet. In mathematical terms, this intuitive assessment of slope mirrors the concept of derivatives. The derivative of a function at any given point quantifies its instantaneous rate of change – the mathematical equivalent of measuring the steepness of your mountain path at a precise location.

Consider the quadratic function f(x) = x². Its derivative, written f'(x) or df/dx, is 2x. This means:

- At x = 3: The slope is 6, so the curve is rising at a rate of 6 units for each unit of rightward movement

- At x = -2: The slope is -4, so the curve is falling at a rate of 4 units for each unit of rightward movement
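A quick way to check these slopes is to compare the power-rule answer with a numerical estimate. The sketch below is illustrative Python; the helper names (f_prime, numerical_derivative) and the step size h are our own choices, not part of any library:

```python
def f(x):
    """The example function f(x) = x**2."""
    return x ** 2


def f_prime(x):
    """Analytic derivative from the power rule: f'(x) = 2x."""
    return 2 * x


def numerical_derivative(func, x, h=1e-5):
    """Central-difference estimate of the slope of func at x,
    using two points a small distance h on either side."""
    return (func(x + h) - func(x - h)) / (2 * h)


for x in (3.0, -2.0):
    print(f"x = {x}: analytic {f_prime(x)}, numerical {numerical_derivative(f, x):.6f}")
```

The two estimates should agree to many decimal places; for x² the central difference is exact apart from floating-point rounding.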

Core Concepts Simplified:

- Function Foundations: Mathematical rules transforming inputs to outputs (e.g., doubling numbers → f(x) = 2x)

- Derivative Dynamics: A new function giving the original function’s slope at every point where it is differentiable

- Calculation Methods: Differentiation rules (power rule, chain rule, product rule) compute derivatives efficiently, without working through limits by hand
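To see the chain and product rules at work, here is a small sketch that compares hand-derived derivatives with a numerical estimate. The functions g and p and the test point x0 = 1.3 are arbitrary examples chosen for illustration:

```python
import math


def numerical_derivative(func, x, h=1e-5):
    """Central-difference estimate of the slope of func at x."""
    return (func(x + h) - func(x - h)) / (2 * h)


# Chain rule: d/dx sin(x**2) = cos(x**2) * (2x)
def g(x):
    return math.sin(x ** 2)


def g_prime(x):
    return math.cos(x ** 2) * 2 * x


# Product rule: d/dx [x**3 * e**x] = 3x**2 * e**x + x**3 * e**x
def p(x):
    return x ** 3 * math.exp(x)


def p_prime(x):
    return 3 * x ** 2 * math.exp(x) + x ** 3 * math.exp(x)


x0 = 1.3
print("chain rule:  ", g_prime(x0), "vs numerical", numerical_derivative(g, x0))
print("product rule:", p_prime(x0), "vs numerical", numerical_derivative(p, x0))
```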

Why Derivatives Matter in Machine Learning

In machine learning, derivatives power the optimization of prediction models. When training algorithms:

- Models make predictions and calculate errors

- Derivatives identify how to adjust parameters to reduce errors

- The process repeats, steadily reducing the error
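Here is that loop in miniature, as a hedged sketch: a one-parameter model y_hat = w * x fitted to a hypothetical four-point dataset using mean squared error. The learning rate of 0.01 and the 100 iterations are assumed values picked to keep the example small:

```python
# Hypothetical toy data generated from y = 2x, so the ideal weight is 2.0
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]


def loss(w):
    """Mean squared error of the one-parameter model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def loss_derivative(w):
    """dL/dw, obtained with the chain and power rules."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


w = 0.0               # initial guess (arbitrary)
learning_rate = 0.01  # step size (assumed value)
for _ in range(100):
    slope = loss_derivative(w)   # how the error changes as w changes
    w -= learning_rate * slope   # move w in the direction that lowers the error

print(f"learned w = {w:.4f}, loss = {loss(w):.2e}")
```

Each pass nudges w against the sign of the derivative: when the error rises as w increases, w goes down, and vice versa. Here w approaches 2.0, the slope the toy data was generated from.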

This mathematical feedback loop enables everything from speech recognition systems to stock market prediction models.

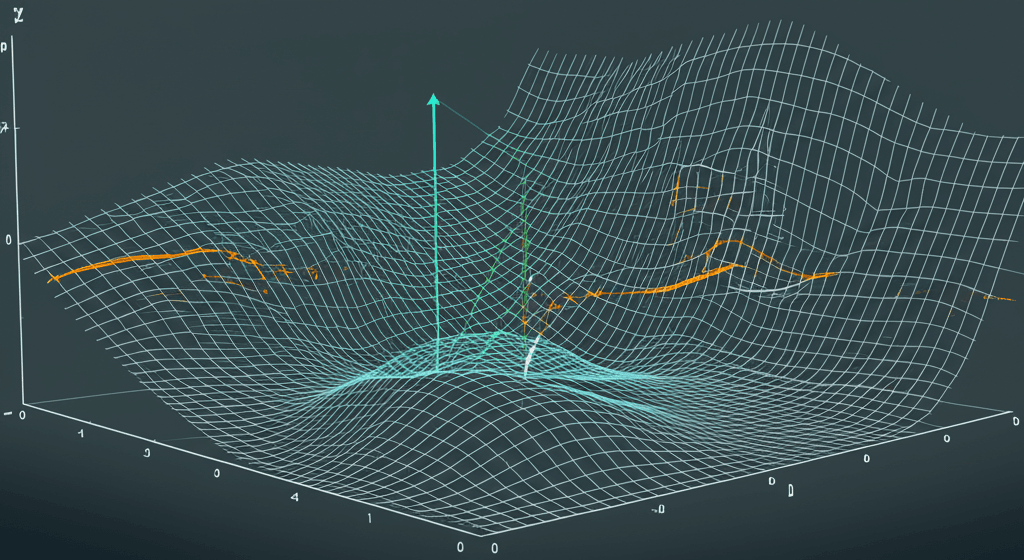

Gradients Explained: Multi-Dimensional Optimization

While derivatives measure slope in one dimension, gradients extend this concept to multidimensional spaces – the complex terrain where most machine learning problems exist. For a function like f(x, y) = x² + y², the gradient becomes a vector:

∇f(x, y) = (2x, 2y)
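The gradient is the partial derivatives stacked into a vector. As a rough check, the sketch below estimates each partial derivative numerically by nudging one coordinate at a time; the evaluation point (1, -3) and the step size h are arbitrary illustrative choices:

```python
def f(x, y):
    """The example surface f(x, y) = x**2 + y**2."""
    return x ** 2 + y ** 2


def analytic_gradient(x, y):
    """Gradient from the partial derivatives: (2x, 2y)."""
    return (2 * x, 2 * y)


def numerical_gradient(func, x, y, h=1e-5):
    """Estimate each partial derivative by nudging one coordinate
    while holding the other constant (central differences)."""
    df_dx = (func(x + h, y) - func(x - h, y)) / (2 * h)
    df_dy = (func(x, y + h) - func(x, y - h)) / (2 * h)
    return (df_dx, df_dy)


point = (1.0, -3.0)
print(analytic_gradient(*point))  # (2.0, -6.0)
print(numerical_gradient(f, *point))
```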

Key Gradient Concepts:

- Partial Derivatives: Each component of the gradient measures change along one dimension while holding the others constant (here ∂f/∂x = 2x and ∂f/∂y = 2y)

- Directional Intelligence: The gradient vector points in the direction of steepest ascent, while its negative points in the direction of steepest descent

- Optimization Blueprint: Guides parameter adjustments in neural networks and other ML models
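The steepest-ascent claim can be tested directly. This sketch uses an arbitrary point (1, 2) and a hypothetical grid of one candidate direction per degree; it measures numerically how fast f(x, y) = x² + y² changes when stepping in each direction, then compares the fastest-rising direction with the gradient’s own direction:

```python
import math


def f(x, y):
    """The example surface f(x, y) = x**2 + y**2."""
    return x ** 2 + y ** 2


def directional_derivative(func, x, y, angle, h=1e-5):
    """Numerically estimate how fast func changes at (x, y) when moving
    along the unit vector (cos(angle), sin(angle))."""
    ux, uy = math.cos(angle), math.sin(angle)
    return (func(x + h * ux, y + h * uy) - func(x - h * ux, y - h * uy)) / (2 * h)


x, y = 1.0, 2.0
gx, gy = 2 * x, 2 * y                          # analytic gradient (2x, 2y) = (2, 4)
angles = [math.radians(k) for k in range(360)]  # one candidate direction per degree

steepest_up = max(angles, key=lambda a: directional_derivative(f, x, y, a))
steepest_down = min(angles, key=lambda a: directional_derivative(f, x, y, a))

print("gradient direction (deg):       ", math.degrees(math.atan2(gy, gx)))
print("fastest increase found at (deg):", math.degrees(steepest_up))
print("fastest decrease found at (deg):", math.degrees(steepest_down))
```

On this grid the fastest-rising direction lands within a degree of the gradient (about 63.4°), and the fastest-falling one sits roughly 180° away, which is why descent methods step along the negative gradient.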

Gradient Descent: The Machine Learning Optimization Engine

The true power of gradients emerges in gradient descent algorithms – the workhorse optimization method for training machine learning models. This process:

- Calculates current error using loss functions

- Computes gradients to identify adjustment directions

- Updates parameters (weights and biases) by stepping against the gradient, with the step size set by a learning rate

- Repeats until the loss stops meaningfully decreasing
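The four steps can be sketched end to end for a small linear model with one weight and one bias. This is a minimal plain-Python illustration, not production training code: the dataset is hypothetical (generated from y = 3x + 1), and the learning rate, step budget and stopping tolerance are assumed values:

```python
# Hypothetical toy data generated from y = 3x + 1
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 4.0, 7.0, 10.0, 13.0]


def mse_loss(w, b):
    """Step 1: current error, measured by mean squared error."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradients(w, b):
    """Step 2: partial derivatives of the loss with respect to w and b."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


w, b = 0.0, 0.0
learning_rate = 0.05  # assumed value
max_steps = 2000      # assumed step budget
tolerance = 1e-8      # assumed stopping threshold

for _ in range(max_steps):
    dw, db = gradients(w, b)
    # Step 4: stop once the gradient is essentially zero
    if abs(dw) < tolerance and abs(db) < tolerance:
        break
    # Step 3: step against the gradient, scaled by the learning rate
    w -= learning_rate * dw
    b -= learning_rate * db

print(f"w = {w:.3f}, b = {b:.3f}, loss = {mse_loss(w, b):.2e}")
```

Larger models follow the same update rule, parameter minus learning rate times partial derivative, just across many more parameters.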

Practical Applications in Modern AI

Derivatives and gradients empower transformative technologies across industries:

- Computer Vision: Enables facial recognition systems to improve accuracy through gradient-based learning

- Natural Language Processing: Helps chatbots understand language nuances via derivative-driven parameter tuning

- Financial Forecasting: Allows algorithmic trading systems to fit predictive models to market data using gradient-based optimization

- Medical Imaging: Supports diagnostic accuracy through image-analysis models trained with gradient descent

Essential Resources for Mastery

Key Courses:

- Calculus I by Khan Academy (free online)

- Machine Learning Mathematics Specialization on Coursera

- Deep Learning Fundamentals by DeepLearning.AI

As machine learning advances, understanding these fundamental calculus concepts becomes increasingly vital. From enhancing recommendation systems to developing life-saving medical AI, derivatives and gradients provide the mathematical framework that allows machines to learn from experience and improve their performance – truly embodying the intelligence behind artificial intelligence.
